Orchestrating and Freeing Agents on a Saturday Night

Yes, it's Saturday night. And yes, I'm spending it tinkering with different agent implementations. No, this is not for work.

With all of that out of the way, I've been seriously interested in building two types of AI applications:

- A strictly orchestrated agent workflow

- A "liberated agent," free to loop and tool call away.

I have a task in mind that works really well for both use cases.

Create a custom newsletter from websites that I frequently scan for articles

Let's see what happens!

Orchestrated vs Free Agents

A task like writing a newsletter can be broken down into a series of subtasks. The workflow for writing a newsletter if I were to do it myself would be something like this:

- I skim a website like HackerNews, reading the post titles

- I click on links that interest me to read more

- I write a summary of articles that I like

- I pick a theme that ties together the articles I pick for the newsletter

It's not hard to imagine these steps written in code, especially with LLMs to structure unstructured data.

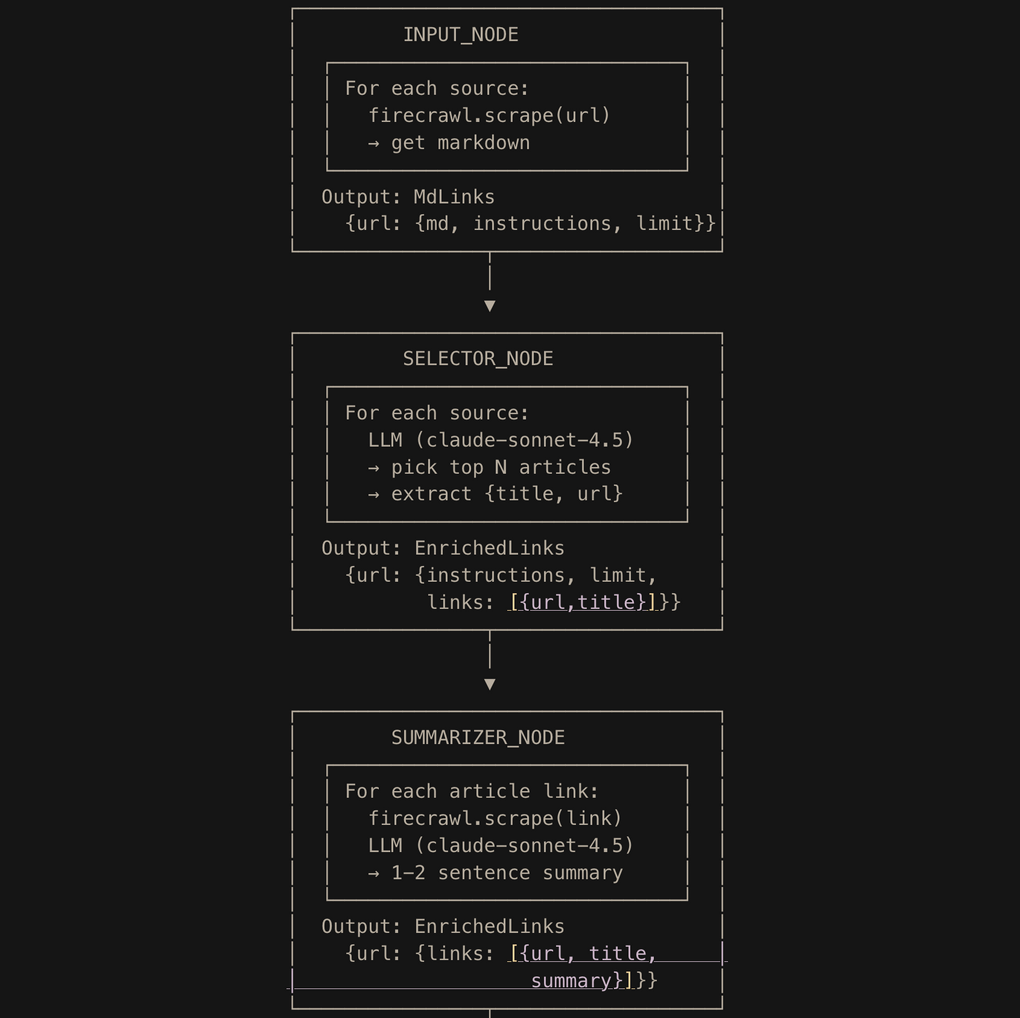

The Orchestrated Agent

An orchestrated agent is one where I define the agent's workflow explicitly. Every step (or node in the workflow graph) runs either

- code to call APIs like Firecrawl and re-structure data

- an LLM step to generate text or generate structured data

My orchestration framework was inspired by the likes of ai-sdk's workflows, LangChain's LangGraph, and my operations research classes. (I miss the good old Simplex Method and Min-Cut Flow Algorithm, but I digress.)

The LLM capability to "generate structure data aspect" is truly magical. It's a bridge that lets us take text and extract the information to a structured schema with type safety for further code steps (bridging code together, impossible without language models).

The Liberated Free Agent

The free agent is an agent that's given a harness with a runtime, tools, prompts, and filesystem, and just loops until task completion. I used LangChain's deepagents because it comes with a pre-configured harness for working with subagents and filesystem tools.

I enforced some limits to not burn through my Firecrawl and Anthropic credits, but besides that, this agent was born to go as deep as it wanted to with the deepagent harness and as far as it wanted in any direction.

Who Writes a Better Newsletter? The Structured or the Creative?

I gave both the agents similar input prompts. For the orchestrated agent:

For the free agent:

Please write a newsletter with 2 articles from hackernews.com and 2 from theringer.com.

For tech, I like articles with AI news, typescript, golang, architecture, product tastes, and game dev.

For sports, I enjoy reading mostly about the NBA, player features, and unusual trends in any sport really.

Here are both newsletters so you can judge for yourself!

Curated Newsletter by the Structured Graph Agent

Curated Newsletter by the Freelancing DeepAgent

I personally like the free agent newsletter a bit better. Perhaps it was my prompts in some of the bridge LLM nodes, but the orchestrated agent's newsletter was wordier and more click-baity.

Self-reflecting, I was also a bit more excited for the free agent's newsletter, because I didn't know what it would write. Whereas in the orchestrated workflow, because I wrote every step, I knew the exact newsletter structure, ruining a bit of the excitement and unknown factor.

Agent Design Implications

This was a fun exercise building agents with two different design philosophies.

My overall takeaways are actually not performance based (I think both agents did great, and the orchestrated graph agent is easier to tune):

1. Better models and better harnesses result in agents capable of running longer to complete even complex tasks

Breaking down tasks to essentially creating their own workflows with "todo" tools, writing large text in files to save context, reading files from a filesystem locally for low-latency RAG - these are all examples of better tooling for agents.

2. Orchestrating is like doing the reasoning for the agent

Better models and harnesses means building a world where agents can reason out even complex tasks. But what I didn't mention from running the sandboxed deepagent is that the token and cost usage was astronomically higher than the orchestrated agent. The free agent cost ~2 dollars to run this prompt a few times as I tested the tooling. Controlling the exact inputs for the orchestrated agent was extremely token efficient and took much fewer LLM turns.

3. Gain control, sacrifice flexibility

Code has always been a way to express our thoughts in a very direct way. You can misconstrue words, but code is interpreted the same way every time. It can be powerful to control every LLM call, put in exact inputs, get exact outputs. But the orchestrated agent can really only do one thing. Take that same orchestrated graph agent and force it to proofread articles, and it will fail miserably. Free agents have harnesses that are universally useful. Prompts are its scripts. Prompts are its code. Sometimes that code will be misinterpreted, but it's easier to iterate on a prompt than iterate on code (even with coding agents).

What's still interesting to debate is if it's easier to evaluate and iterate on orchestrated agents or free-running agents. Writing a new prompt for a new use case will be easier and faster than creating a new orchestration flow, but the tracing, the tuning, and the path to production may be faster with orchestrated agents. Maybe?

That'll be a question for another Saturday night.

:wq

-Jack